Why Proxies Matter in Modern Market Research

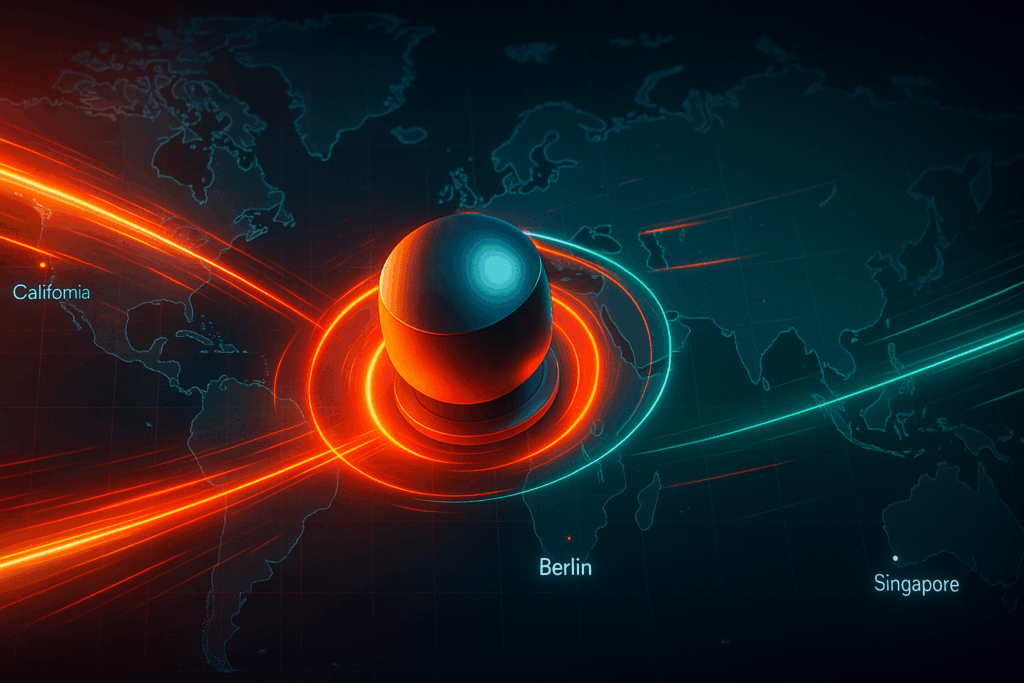

Picture this: your team is testing pricing strategies for a new product across three regions: California, Berlin, and Singapore. It sounds simple until you realize that websites choose promotions and page versions based on your own IP address, so what you see from the office is not what shoppers in those cities see. The data you gather? Biased. That’s where proxy IPs come in.

Proxy IPs act as an intermediary between your device and the website, masking your actual location. They make digital market research fair and geographically realistic. For marketing managers and startup founders, they level the playing field. Instead of viewing international markets through country-based filters that bias the results, you can scan each market repeatedly from the perspective of real local customers.

Every useful insight, whether it concerns competitive prices, regional preferences, or the timing of consumer trends, starts with clean, locally accurate data. Without proxies, you make decisions from outdated or incomplete information. With them, you see how markets really respond.

How to Collect Global Market Data with Proxies

To study international markets, you need reliable ways to mimic a local visitor. Proxies achieve that by routing your web requests through an address in a specific country or city, so websites treat you as a local visitor.

Example: let’s say you’re researching Colombian e‑commerce pricing for a client without flying down there. By routing requests through a Colombian proxy node, you can view local item listings, shipping fees, and promotional offers exactly as real customers do.

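Mechanism behind it: when you query a distant server directly, your IP address reveals your region through IP geolocation lookups, and signals such as the Accept-Language header can reinforce that impression in server logs. A localized proxy node replaces the IP address the site sees, so its scripts treat you as a region-matched user. Headers such as Accept-Language are still sent by your own client, so set them to match the target region.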

Below is a light example in Python for sending regional data requests through a proxy network:

    import requests

    # A rotating proxy endpoint from Torchlabs
    proxy = {
        'http': 'http://us_user:pass@proxy.torchlabs.xyz:8011',
        'https': 'http://us_user:pass@proxy.torchlabs.xyz:8011'
    }

    headers = {'User-Agent': 'Mozilla/5.0'}
    url = 'https://example-retail.com/catalogue'

    response = requests.get(url, headers=headers, proxies=proxy, timeout=10)
    print(response.status_code)

This sample connects through an American residential node while collecting catalog data that often differs by ZIP.
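A proxy changes the IP address a site sees, but headers such as Accept-Language are still set by your client. The sketch below shows one possible way to keep the two aligned per region. The username pattern (`us_user`, `de_user`, `co_user`), the `REGION_PROFILES` table, and the `build_request_kwargs` helper are all hypothetical names used for illustration, not a documented provider format.

```python
# Hypothetical region profiles: usernames and language values are
# assumptions for illustration, not a documented provider format.
REGION_PROFILES = {
    "US": {"user": "us_user", "accept_language": "en-US,en;q=0.9"},
    "DE": {"user": "de_user", "accept_language": "de-DE,de;q=0.9,en;q=0.5"},
    "CO": {"user": "co_user", "accept_language": "es-CO,es;q=0.9,en;q=0.5"},
}

PROXY_HOST = "proxy.torchlabs.xyz:8011"  # endpoint reused from the sample above


def build_request_kwargs(region, password="pass"):
    """Return keyword arguments for requests.get() that match one region."""
    profile = REGION_PROFILES[region]
    proxy_url = f"http://{profile['user']}:{password}@{PROXY_HOST}"
    return {
        "proxies": {"http": proxy_url, "https": proxy_url},
        "headers": {
            "User-Agent": "Mozilla/5.0",
            "Accept-Language": profile["accept_language"],
        },
        "timeout": 10,
    }


kwargs = build_request_kwargs("DE")
print(kwargs["headers"]["Accept-Language"])
# With the requests library installed, the call would look like:
# response = requests.get("https://example-retail.com/catalogue", **kwargs)
```

Keeping the proxy and the headers in one table makes it harder to send, for example, a German exit IP with a US language preference.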

Why it works: collecting data through exit points in several countries lets you compare features side by side, makes pricing parity studies more accurate, and expands the usable inputs for machine learning forecasting models.

Still, proxy selection affects credibility. Rotating across different geo pools, say through a Torch Proxies premium residential plan rather than a basic ISP route, keeps scraping activity varied enough to avoid rate limits and data gaps.

Takeaway: thoughtful proxy planning stops your datasets from reflecting your office’s default viewpoint. It creates truly global visibility without physical presence.

Competitor Analysis Using Proxies

Discreet analysis becomes harder once competitors detect traffic spikes from obvious sources such as Google Sheets integrations or all-in-one data tools. Proxy IPs help researchers avoid those footprint patterns and the skewed results they cause.

Point: competitor benchmarking can expose pricing changes just hours after they roll out regionally. Proxy routing preserves neutrality while sampling public data.

Example: imagine running a small tool that monitors new arrivals from multiple regional app stores or grocery inventory endpoints. Instead of hammering them from a single, easily identified IP, use geographically rotating network exits: Asia first, then Europe.

Mechanism: session rotation switches the IP after every n requests. Schedulers built on asyncio, or proxy orchestration scripts, spread daily runs across local endpoints while respecting robots.txt and delay cues. With TurnKey DNS filters, the setup also suppresses the browser redirect loops common with geo-walls.

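Here’s a minimal Python view of batch competitor monitoring across multiple exit locations:

    locations = ['JP', 'DE', 'CA']

    def yield_requests_via(country):
        # Placeholder: a real version would send this batch through a
        # proxy exit located in `country`
        print(f'monitoring via {country} exit')

    for country in locations:
        yield_requests_via(country)  # uses localized proxy packages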

The returned data looks as if it were gathered locally, and your own footprint stays minimal.
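The rotation idea, switching exits after every n requests, can be sketched roughly as follows. This is an illustration under stated assumptions, not a provider feature: `RotatingExit` and `plan_requests` are hypothetical names, the country codes stand in for real proxy endpoints, and the loop only plans which exit each URL would use instead of sending traffic.

```python
import itertools
import time


class RotatingExit:
    """Switch to the next proxy exit after every `n` requests."""

    def __init__(self, exits, n):
        if n < 1:
            raise ValueError("n must be at least 1")
        self._cycle = itertools.cycle(exits)
        self._n = n
        self._used = 0
        self._current = next(self._cycle)

    def next_exit(self):
        # Move on once the current exit has served n requests.
        if self._used == self._n:
            self._current = next(self._cycle)
            self._used = 0
        self._used += 1
        return self._current


def plan_requests(urls, rotator, delay_seconds=0.0):
    """Pair each URL with the exit it should use, pausing between items."""
    plan = []
    for url in urls:
        plan.append((url, rotator.next_exit()))
        time.sleep(delay_seconds)  # polite pacing; tune per target site
    return plan


rotator = RotatingExit(["JP", "DE", "CA"], n=2)
urls = [f"https://example-retail.com/catalogue?page={i}" for i in range(5)]
for url, exit_code in plan_requests(urls, rotator):
    print(exit_code, url)
```

With n=2, the first two pages go through the first exit, the next two through the second, and so on, wrapping around when the list is exhausted.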

Sometimes you need to look at the page itself. Example: analyzing ad placements in verified US-only social feeds without skew from overseas filters. The more authentic the IP perspective, the more reliable the data you enrich with it.

Still, remember ethics. Collecting openly published data is generally acceptable, but never breach a platform’s terms or automate access to private sections, and respect privacy boundaries. Responsible proxy use supports compliance and longer-term reliability.

Takeaway: true competitor observation relies on anonymity, locality, and respect for limits. Proxies deliver the first two; discipline secures the third.

Choosing a Reliable Proxy Provider for Global Data Access

Market data feeding offline models arrives more slowly with every network hop between cities. Quality proxy vendors stitch decentralized networks together behind a single API call. Providers today differ in bandwidth limits, node coverage, throttling behavior, and IP health over time.

Please note: whichever provider you choose, confirm that your use complies with local law. Some jurisdictions regulate mass surveillance and open-data crawling differently.

Think of proxy quality using three vectors:

- Latency variance: a variation of ±100 ms around your baseline is tolerable; anything above 400 ms starts to disrupt timestamp alignment.

- IP credibility: a blocked-ASN share under 2% indicates good list hygiene.

- Node rotation window: long windows between refreshes let parity comparisons drift. If you smooth results over roughly one week, favor networks that refresh their IP batches every 48 hours to reduce that drift.
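The first two measures can be estimated from a handful of probe requests. The sketch below is a rough illustration: the `samples` values are made up, the 400 ms threshold comes from the list above, and counting HTTP 403 and 429 responses as blocks is an assumption that only approximates a blocked-ASN percentage.

```python
from statistics import mean, pstdev

# Hypothetical probe results: (latency in ms, HTTP status) per test request.
samples = [(180, 200), (220, 200), (450, 200), (210, 403), (190, 200)]

BLOCK_STATUSES = {403, 429}  # assumption: treat these statuses as blocks


def summarize(samples, slow_ms=400):
    """Summarize latency and block behavior for one proxy pool."""
    latencies = [ms for ms, _ in samples]
    blocked = sum(1 for _, status in samples if status in BLOCK_STATUSES)
    return {
        "mean_ms": mean(latencies),
        "spread_ms": pstdev(latencies),  # population standard deviation
        "slow_share": sum(ms > slow_ms for ms in latencies) / len(samples),
        "block_rate": blocked / len(samples),
    }


report = summarize(samples)
print(report)
```

Running the same summary per provider and per region gives a like-for-like basis for comparison before committing to a plan.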

The final decision? Choose extensibility over raw IP counts. Across a research life cycle, being able to scale pool depth month by month fixes consistency problems before cost becomes the issue. If you are starting new integration tests, begin with a small pool, but tune your routing logic and your Torch Proxies command-line tokens early.

Getting the Most Out of Proxies in Market Intelligence

Mastery with proxies balances convenience and constraint. A rotation misfire or a DNS lookup failure at, say, 03:00 UTC can leave a hole in your daily collection logs.

For sustainable success:

- Tune request frequency, keeping intervals of about 120 seconds per group of domains.

- Combine data caching and fingerprint obfuscation only where they don’t break authentication.

- Store server responses and anomalous status codes for each route variant.
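The request-interval point can be enforced in code rather than by convention. Here is a minimal sketch, assuming each domain is its own group and using the 120-second interval from the list above; `DomainThrottle` is a hypothetical helper, not part of any proxy SDK.

```python
import time
from urllib.parse import urlparse


class DomainThrottle:
    """Allow at most one request per domain every `interval` seconds."""

    def __init__(self, interval=120.0, clock=time.monotonic):
        self._interval = interval
        self._clock = clock  # injectable so the logic can be tested
        self._last_seen = {}

    def wait_time(self, url):
        """Seconds to wait before `url` may be fetched (0.0 if ready now)."""
        domain = urlparse(url).netloc
        last = self._last_seen.get(domain)
        if last is None:
            return 0.0
        return max(0.0, self._interval - (self._clock() - last))

    def mark(self, url):
        """Record that a request to this URL's domain was just sent."""
        self._last_seen[urlparse(url).netloc] = self._clock()


throttle = DomainThrottle(interval=120.0)
url = "https://example-retail.com/catalogue"
print(throttle.wait_time(url))      # nothing sent yet, so no wait
throttle.mark(url)
print(throttle.wait_time(url) > 0)  # a second request must now wait
```

A collector would call `wait_time()` before each request, sleep for the returned number of seconds, then call `mark()` once the request is sent.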

In operation, think in terms of a series of experiments rather than open-ended logging. Keep your proxy configurations manageable within a version-controlled repository. Evaluate each run with separate latency checks and per-region validation scripts. Done correctly, this builds market datasets credible enough to earn executive trust.

So what next?

- Audit where location bias distorts your view (check how many regions your next dataset actually covers).

- Onboard a small batch of rotating proxies from Torch Proxies and test them for stability over a week.

- Document performance, response delays, and block rate.

- Publish internal reports every month for each region you cover.
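The first audit step, checking a dataset’s regional variety, can be as simple as counting records per region. This is a small sketch with made-up rows; the `region` field name and the `expected_regions` set are assumptions about how your data is labeled.

```python
from collections import Counter

# Hypothetical dataset rows; the "region" field name is an assumption.
records = [
    {"region": "US", "price": 19.99},
    {"region": "US", "price": 21.49},
    {"region": "DE", "price": 18.50},
    {"region": "US", "price": 20.00},
]

expected_regions = {"US", "DE", "SG"}


def region_coverage(records, expected):
    """Return each expected region's share of rows and the regions with none."""
    counts = Counter(row["region"] for row in records)
    total = sum(counts.values())  # assumes at least one record
    shares = {region: counts[region] / total for region in sorted(expected)}
    missing = sorted(region for region in expected if counts[region] == 0)
    return shares, missing


shares, missing = region_coverage(records, expected_regions)
print(shares)
print("missing:", missing)
```

A region that is missing, or that holds only a sliver of the rows, is a sign the dataset still reflects where it was collected from.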

By mastering proxy control, researchers turn gut instinct into a structured, global view of their markets. And that is what turns data floods into insight streams.